We use big-O notation to describe the growth of functions. In the case of algorithms, we use it to calculate the temporal complexity of an algorithm with respect to the algorithm’s input.

We use big-O notation in algorithms, to calculate the theoretical temporal complexity of an algorithm.

The main advantage of the big-O notation is that it is independent of a certain implementation, programming languages, and hardware. This allows us to compare two algorithms, according to their efficiency, without the need to implement them or even having to use a computer.

Big-O notation is a topic from mathematics that we borrow in Computer Science (like many other topics). So, as we always do in mathematics, let’s start with the definition.

Big-O definition

Definition: “Let f and g be functions from the set of integers or the set of real numbers to the set of real numbers. We say that f (x) is O(g(x)) if there are constants C and k such that |f (x )| ≤ C|g(x)| whenever x > k. [This is read as “f (x) is big-oh of g(x).”] ”. Source: Discrete Mathematics and its Applications By Rosen.

From this definition, we can infer that f(x) grows more slowly than a certain multiple of g(x).

We call the constants C and k witnesses. So, for you to prove that f(x) is O(g(x)), all you need to do is to find the witnesses C and k.

In Computer Science, we use two properties of Big-O to calculate the temporal complexity of algorithms without the need to find C and k.

Big-O graphical definition

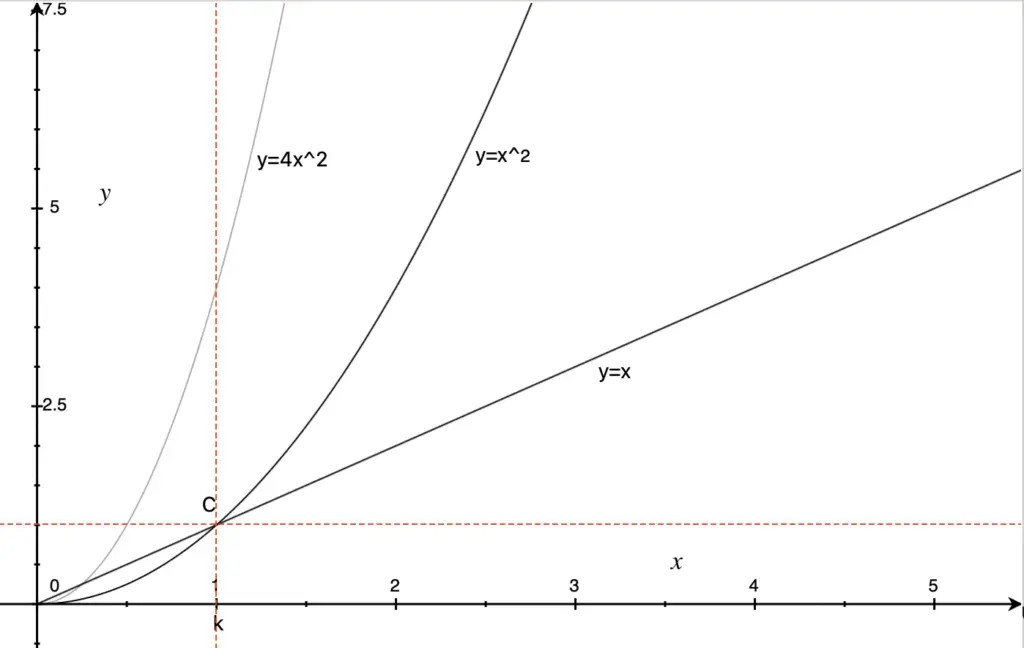

Let’s see this definition graphically. Let’s create the graph for the functions f(x)=x, g(x)=x2, and h(x)=4x2.

From the figure above, you can see that x is O(x2). Also, 4x2 is O(x2), and x is O(4x2).

Now that you understand the definition of big-O, let’s see the properties that we use to determine the temporal complexity of an algorithm.

These two properties can be proven by using the definition of big-O (I’ll leave the proof to the reader):

- Sum rule: if f1 is O(g(x)) and f2 is O(h(x)) ⇒ (f1 +f2)(x) is O(max(|g(x)|,|h(x)|))

- Product rule: if f1 is O(g(x)) and f2 is O(h(x)) ⇒ (f1 * f2)(x) is O( g(x) * h(x) )

How is this useful for us?

Algorithms are a finite sequence of steps that, when executed in a certain order, solve a specific problem.

By using the two rules above, we can determine the temporal complexity of a whole algorithm. We first calculate the temporal complexity of single steps and combine them using the sum and product rule.

In the next post, I give you some examples of how these two rules are applied to calculate the temporal complexity of iterative algorithms.